How To Configure Nginx as a Load Balancer

Last Updated on January 8, 2023

What is load balancing?

Load balancing involves distributing incoming network traffic across a group of backend servers. A load balancer is tasked with spreading the load among the backend servers that have been set up. There are multiple types of load balancers:

- Application Load Balancer

- Network Load Balancer

- Gateway Load Balancer

- Classic Load Balancer

Each type of load balancer has different use cases.

In this article, I am going to cover Nginx as a load balancer.

What Is Nginx Load Balancing?

Nginx is a high-performance web server which can also be used as a load balancer. Nginx load balancing refers to the process of distributing web traffic across multiple servers using Nginx.

This ensures that no single server is overloaded and that all requests are handled in a timely manner. Nginx uses a variety of algorithms to determine how to best distribute traffic, and it can also be configured to provide failover in case one of the servers goes down.

You can use either Nginx open source or Nginx Plus to load balance HTTP traffic to a group of servers.

Personally, I use Nginx open source to set up my load balancers and that is what I am going to show you in this article.

Advantages of load balancing

Load balancing helps in scaling an application by handling traffic spikes without increasing cloud costs. It also helps remove the problem of a single point of failure. Because the load is distributed, if one server crashes, the service would still be online.

Configuring Nginx as a load balancer

Follow the steps below to configure Nginx as a load balancer.

Installing Nginx

The first step is to install Nginx. Nginx can be installed on Debian, Ubuntu or CentOS. I am going to use Ubuntu, which I have configured on my Contabo VPS.

    sudo apt-get update
    sudo apt-get install nginx

Configure Nginx as a load balancer

The next step is to configure Nginx. We can edit the default site configuration file to set up the load balancer.

    cd /etc/nginx/sites-available/
    sudo nano default

Replace the contents of the file with the following configuration:

    # On Ubuntu, nginx.conf already loads this file inside its http { } block,
    # so the upstream and server blocks go here without an outer http { } wrapper.
    upstream app {
        server 10.2.0.100;
        server 10.2.0.101;
        server 10.2.0.102;
    }

    # This server accepts all traffic to port 80 and passes it to the upstream.
    # Notice that the upstream name and the proxy_pass need to match.
    server {
        listen 80;
        server_name mydomain.com;

        location / {
            include proxy_params;
            proxy_pass http://app;
            proxy_redirect off;
            proxy_http_version 1.1;
            proxy_set_header Upgrade $http_upgrade;
            proxy_set_header Connection "upgrade";
        }
    }

We will need to define the upstream directive and the server directive in the file. Upstream defines where Nginx will pass the requests upon receiving them. It contains the IP addresses of the upstream server group (the backends), to which requests are sent based on the load balancing method chosen. By default, Nginx uses a round-robin load balancing method to distribute the load across the servers.

The server segment defines port 80, on which Nginx will receive requests. It also contains a proxy_pass directive.

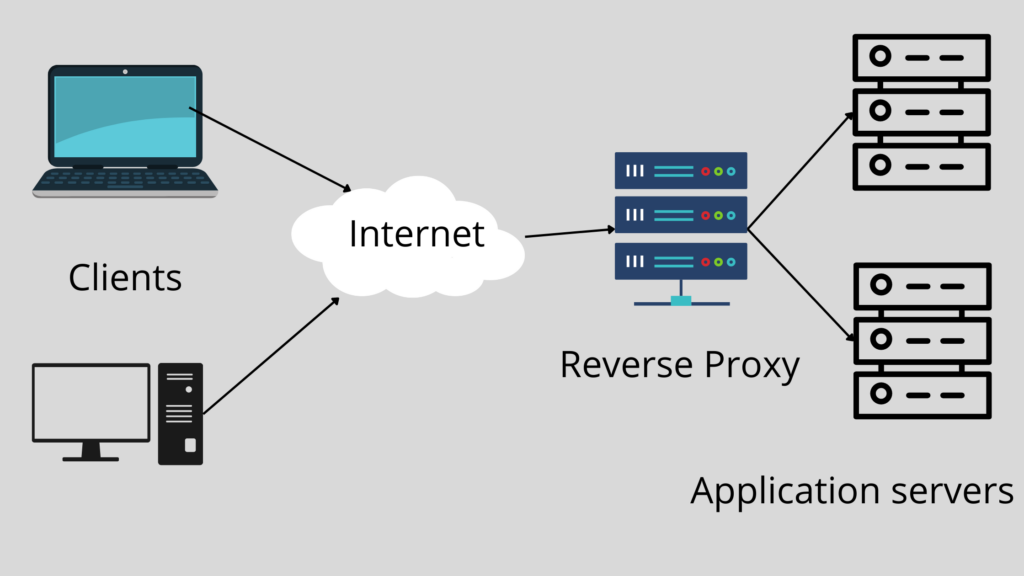

The proxy_pass directive tells NGINX where to send the traffic that it receives. In this case, proxy_pass points to the upstream group named app, which contains 3 servers. This tells NGINX to forward each request to one of the upstream servers’ IPs provided. Nginx acts as both a reverse proxy and a load balancer.

A reverse proxy is a server that sits in front of backend servers and intercepts requests from clients before forwarding them to a backend.
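The `include proxy_params;` line in the configuration relies on a file that the Debian and Ubuntu Nginx packages place at /etc/nginx/proxy_params. As a rough sketch, assuming your system does not ship that file (for example on CentOS), the location block could set the same kind of headers by hand. The header list below reflects what that file typically contains, so treat it as an assumption and compare it with your own copy:

```nginx
location / {
    # Pass the original Host header and client address on to the backend,
    # so the application can see who actually made the request.
    proxy_set_header Host $http_host;
    proxy_set_header X-Real-IP $remote_addr;
    proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
    proxy_set_header X-Forwarded-Proto $scheme;

    proxy_pass http://app;
}
```

Without these headers, the backend servers would see every request as coming from the load balancer's own IP address.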

Selecting a load-balancing method

The next step is to determine the load balancing method to use. There are multiple load balancing methods we can use. They include:

Round Robin

Round Robin is a load balancing method where each server in a cluster is given an equal opportunity to process requests. This method is often used in web servers, where each server request is distributed evenly among the servers.

The load is distributed in rotation, meaning that each server takes its turn to handle a request. For example, if you have three upstream servers, A, B and C, then the load balancer will first send a request to A, then to B and finally to C before starting again at A. It is fairly simple and does have its fair share of limitations.

One of the limitations is that the rotation ignores how busy or how powerful each server is, so some servers sit idle while they wait for their turn. In this example, if A is given a task and executes it in a second, it then stays idle until it is next assigned a task. By default, Nginx uses round robin to distribute load among servers.

Weighted Round Robin

To solve the issue of server idleness, we can use server weights to instruct Nginx on which servers should be given the most priority. It is one of the most popular load balancing methods used today.

This method involves assigning a weight to each server and then distributing traffic among the servers based on these weights. This ensures that servers with more capacity receive more traffic, and helps to prevent overloading of any one server.

This method is often used in conjunction with other methods, such as session persistence. The application server with the highest weight parameter is given priority (more traffic) compared to the server with the lowest weight.

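We can update the Nginx configuration to include the server weights. With the weights below, out of every nine requests, five go to 10.2.0.100, three to 10.2.0.101 and one to 10.2.0.102.

    # In /etc/nginx/sites-available/default (no outer http { } needed there)
    upstream app {
        server 10.2.0.100 weight=5;
        server 10.2.0.101 weight=3;
        server 10.2.0.102 weight=1;
    }

    server {
        listen 80;

        location / {
            proxy_pass http://app;
        }
    }

Least Connection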

The least connection load balancing method is a popular technique used to distribute workloads evenly across a number of servers. The method works by routing each new connection request to the server with the fewest active connections. This ensures that all servers are used equally and that no single server is overloaded.

    # In /etc/nginx/sites-available/default (no outer http { } needed there)
    upstream app {
        least_conn;
        server 10.2.0.100;
        server 10.2.0.101;
        server 10.2.0.102;
    }

    server {
        listen 80;

        location / {
            proxy_pass http://app;
        }
    }

Weighted Least Connection

The Weighted Least Connection load balancing method distributes workloads across multiple servers in order to optimize performance and minimize response times. It takes into account both the number of active connections each server has and the weight assigned to that server, so a server with a higher weight is allowed to carry proportionally more connections.

    # In /etc/nginx/sites-available/default (no outer http { } needed there)
    upstream app {
        least_conn;
        server 10.2.0.100 weight=5;
        server 10.2.0.101 weight=4;
        server 10.2.0.102 weight=1;
    }

    server {
        listen 80;

        location / {
            proxy_pass http://app;
        }
    }

IP Hash

The IP Hash load balancing method uses a hashing algorithm to determine which server should receive each incoming request. This is useful when you want to ensure that every request from a given client IP address is routed to the same server. Nginx builds the hash key from the client IP address (the first three octets of an IPv4 address, or the entire IPv6 address) and uses it to allocate the client to a particular server. Requests from that client keep going to the same server unless it becomes unavailable.

This can help in the case of canary deployments. It allows us as developers to roll out changes to a subsection of users so that they can test and provide feedback before shipping it out as a feature to all the users.

The advantage of this approach over round-robin is that a client stays on one server, so anything that server keeps locally for that client, such as a session, remains usable across requests.

    # In /etc/nginx/sites-available/default (no outer http { } needed there)
    upstream app {
        ip_hash;
        server 10.2.0.100;
        server 10.2.0.101;
        server 10.2.0.102;
    }

    server {
        listen 80;

        location / {
            proxy_pass http://app;
        }
    }

URL Hash

URL Hash load balancing also uses a hashing algorithm, but it determines which server will receive each request based on the URL.

It is similar to the IP Hash load balancing method, but the difference here is that we hash the requested URL as opposed to the client IP. This ensures that requests for the same URL always reach the same server, which helps when each server keeps its own cache.
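Unlike the other methods, URL hashing has no dedicated keyword of its own. A minimal sketch, assuming the same three backends as in the earlier examples, uses the generic `hash` directive from the open source upstream module with the request URI as the key:

```nginx
upstream app {
    # Hash on the request URI so that the same URL always maps to the same server.
    # "consistent" selects consistent hashing, so adding or removing a server
    # remaps only a small share of the URLs.
    hash $request_uri consistent;

    server 10.2.0.100;
    server 10.2.0.101;
    server 10.2.0.102;
}

server {
    listen 80;

    location / {
        proxy_pass http://app;
    }
}
```

Note that `$request_uri` includes the query string, so /page?a=1 and /page?a=2 may land on different servers. Hashing on `$uri` instead would ignore the query string.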

Restart Nginx

Once you have configured the load balancer with the correct load balancing method, check the configuration for syntax errors and then restart Nginx for the changes to take effect.

    sudo nginx -t
    sudo systemctl restart nginx

Proxying HTTP traffic to a group of servers

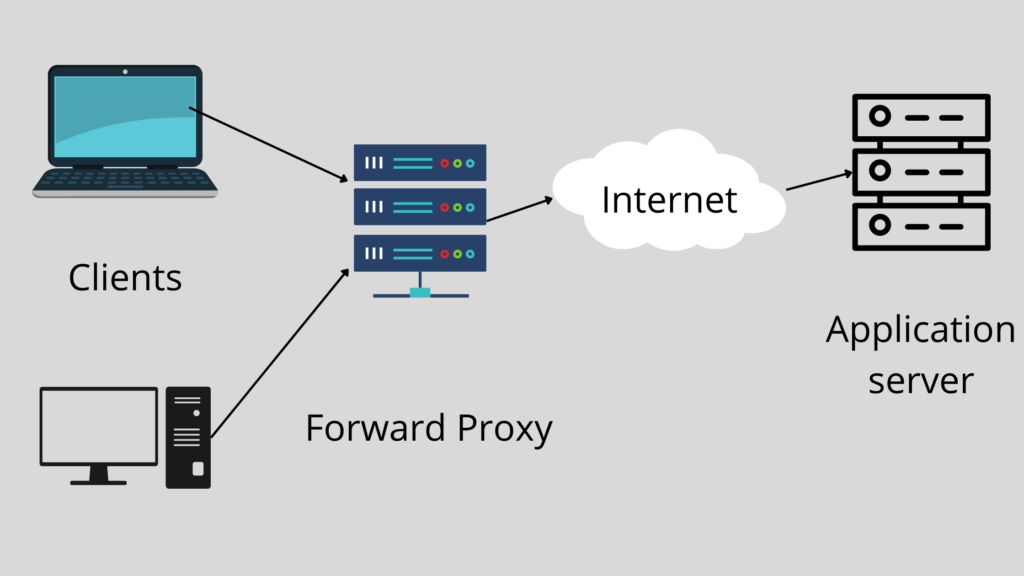

A proxy server is a server that acts as an intermediary between a client and another server. A proxy server can be used to allow clients to access a group of servers, such as a group of web servers, by forwarding requests from the client to the appropriate server.

The proxy server can also provide caching, which can improve performance by reducing the need to send requests to the server for each page view. Nginx can act as a forward proxy or a reverse proxy, although it is most commonly used as a reverse proxy. The difference between a forward proxy and a reverse proxy is that a forward proxy sits in front of multiple clients and a reverse proxy sits in front of multiple backends.

A forward proxy protects the identity of the clients while a reverse proxy protects the identity of the backend servers.

Load balancing with HTTPS enabled

Load balancing with HTTPS enabled is a great way to improve the performance of your website. By using a load balancer, you can distribute the load of your website across multiple servers, which can help to reduce the strain on any one server.

Additionally, by enabling HTTPS, you can ensure that all communications between the servers and visitors to your site are encrypted, which can help to keep your site safe and secure.

It does come with its fair share of problems. One problem is that each new encrypted connection requires a TLS handshake, and possibly a DNS lookup, which might lead to delayed responses.

I personally have my backend application servers communicate with the load balancer over plain HTTP (SSL termination), while the load balancer communicates with the clients through HTTPS. This way, there are no TLS handshakes and DNS lookups to be considered when routing requests to application servers.
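The SSL termination setup described above can be sketched roughly as follows. This is only an outline under stated assumptions: the certificate paths are hypothetical placeholders, and the backends are the same three servers used in the earlier examples.

```nginx
upstream app {
    server 10.2.0.100;
    server 10.2.0.101;
    server 10.2.0.102;
}

server {
    # Clients connect to the load balancer over HTTPS.
    listen 443 ssl;
    server_name mydomain.com;

    # Hypothetical certificate paths; point these at your own files.
    ssl_certificate     /etc/ssl/certs/mydomain.com.crt;
    ssl_certificate_key /etc/ssl/private/mydomain.com.key;

    location / {
        # TLS ends here; traffic to the backends is plain HTTP.
        # The header lets the application know the client used HTTPS.
        proxy_set_header X-Forwarded-Proto $scheme;
        proxy_pass http://app;
    }
}
```

Because the traffic between the load balancer and the backends is unencrypted in this layout, it only makes sense when that traffic stays on a private network.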

Health checks

Load balancers are often used to distribute incoming traffic across a group of servers, in order to avoid overloading any single server. Health checks are a vital part of ensuring that the load balancer is able to correctly route traffic, as they can help to identify when a server is down or not functioning properly.

Nginx can monitor the health of our HTTP servers in an upstream group. Nginx can perform Passive Health checks and Active health checks.

Passive health checks

With passive health checks, NGINX monitors transactions as they take place and tries to resume failed connections. If the transaction still cannot be resumed, NGINX marks the server as unavailable and temporarily stops sending requests to it until it is deemed active again.

Two parameters on the server directive inside the upstream block control when an upstream server is marked as unavailable: fail_timeout and max_fails.

fail_timeout sets the time during which a number of failed attempts must happen for the server to be marked unavailable. It is also how long the server then stays marked as unavailable. The default is 10 seconds.

max_fails sets the number of failed attempts. The default is 1.

    upstream app {
        server 10.2.0.100 max_fails=3 fail_timeout=60s;
        server 10.2.0.101;
        server 10.2.0.102;
    }

Active Health Checks

NGINX can periodically check the health of upstream servers by sending special health‑check requests to each server and verifying the correct response.

    server {
        location / {
            proxy_pass http://app;
            health_check;
        }
    }

I have not used active health checks, as the health_check directive is part of Nginx Plus. You can read more on Active health checks here.

Self-managed load balancer vs. managed service

There are two main types of load balancers: self-managed and managed. Self-managed load balancers are usually less expensive, but require more technical expertise to set up and maintain. Managed load balancers are more expensive but often come with more features and support.

If you want to sleep well at night 😂 then managed load balancers are way better as they remove the headache of constantly debugging.

Limiting the number of connections

One way to improve the performance of a load balancer is to limit the number of connections that it tries to establish to each server. This can prevent the load balancer from overloading any one server and can help to ensure that each server has a fair share of the traffic.
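A minimal sketch of such a limit, assuming a reasonably recent open source Nginx, uses the `max_conns` parameter on each upstream server. The limit values here are illustrative, not recommendations:

```nginx
upstream app {
    # Shared memory zone so the limits apply across all worker processes;
    # without it, each worker process counts connections on its own.
    zone app 64k;

    # Illustrative limits: the smaller server accepts fewer connections.
    server 10.2.0.100 max_conns=100;
    server 10.2.0.101 max_conns=100;
    server 10.2.0.102 max_conns=50;
}
```

The default for `max_conns` is 0, which means no limit. If idle keepalive connections to the backends are enabled, the total number of connections to a server may exceed the configured value.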

A reverse proxy and a load balancer are similar in many ways. Both can be used to improve the performance and availability of a website or application. Both can also be used to distribute traffic among multiple servers.

Conclusion

Load balancing is a good way to distribute requests across application instances. It provides high availability and keeps any single server from being overloaded. Using the Nginx load balancer to distribute load is beneficial as it serves as both a reverse proxy and a load balancer. It is also open source, so the load balancing methods covered here are available without a paid license. I hope this article was able to help you set up an Nginx load balancer. If you have any questions feel free to ask them in the comment section. Thank you for reading.